The last year has produced a succession of Black Mirror-style moments, but one of the most memorable was the arrival of an AI tool that could animate our old family photos with disturbing realism.

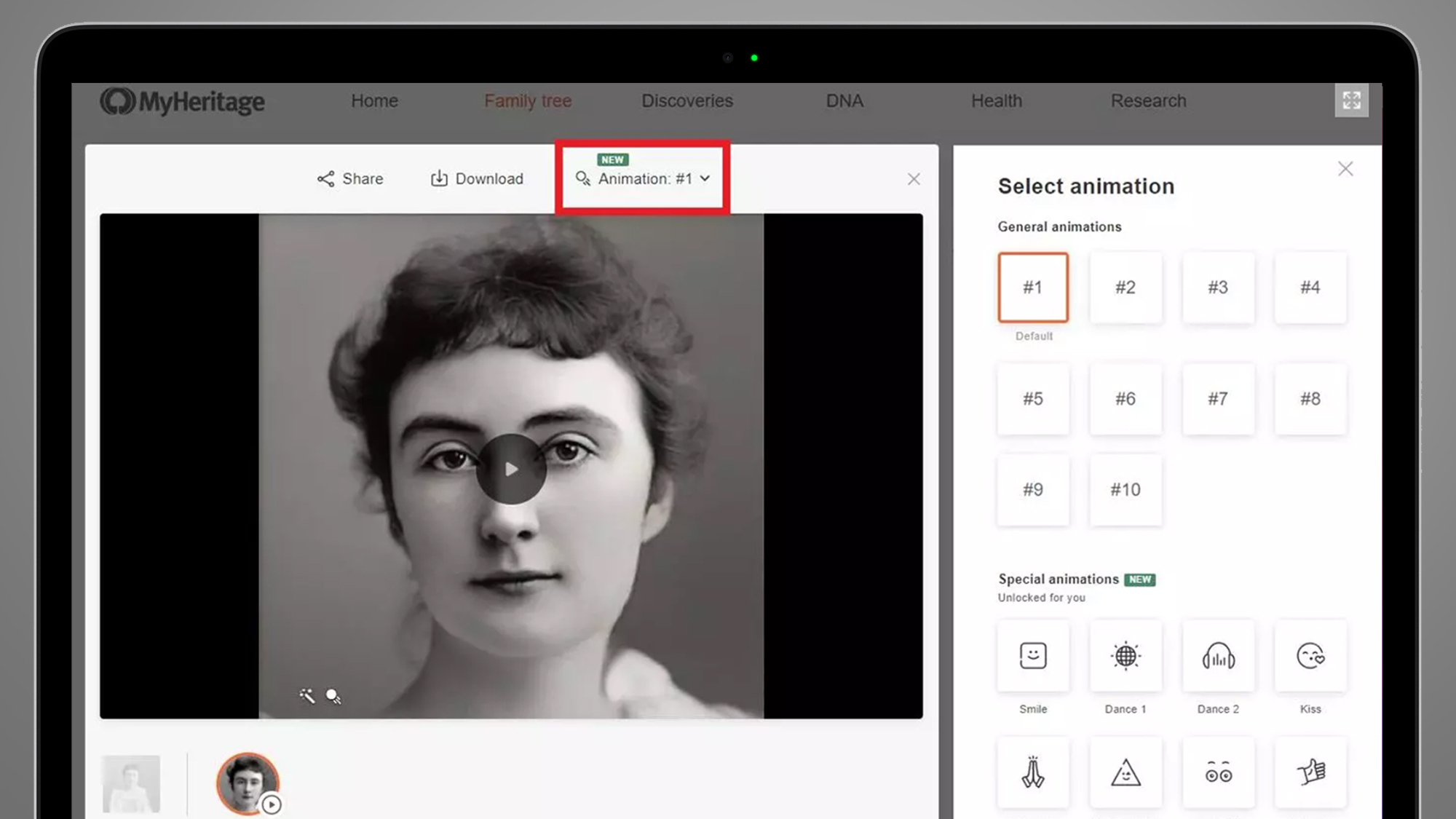

Called 'Deep Nostalgia', it landed in late February and charmed social media with its ability to quickly and convincingly animate all kinds of faces: distant relations, statues, tattoos and, of course, the poster for A Nightmare on Elm Street.

Like pretty much everything, the tech divided internet opinion. Some found it to be a heartwarming cheat code for 'meeting' old relations they'd never met. Others felt it bordered on necromancy. Most of us were probably in a conflicted space somewhere in between. But the big news for photography is that it now exists – and in a form that has massive mainstream potential.

But how exactly do AI and machine learning reanimate your old family photos? And where is this all heading? 'Deep Nostalgia' came from the genealogy firm MyHeritage, but it actually licensed the tech from Israeli company D-ID (short for 'De-Identification').

We had a fascinating chat with D-ID's Co-Founder & CEO Gil Perry to find out the answers to those questions – and why he thinks most visual media on the internet will be 'synthetic' within the next decade...

Scary movies

D-ID's 'Live Portrait' tech might be new, but its fundamentals aren't. Facial re-animation based on machine learning was demoed as far back as 1997, while in 2016 the Face2Face program gave us 'Deep Nostalgia' chills by turning George Bush and Vladimir Putin into real-time digital puppets.

But in the past few years the tech has made a crucial leap – from the mildly reassuring confines of university research papers and onto our smartphones. With free services like Deep Nostalgia and Avatarify able to whip up convincing videos from a single still photo, Pandora's re-animated box has been flung open.

For a while now, it's been relatively easy for computers to invent a new person in photo form – if you haven't seen it before, we apologize for sending you down the rabbit hole that is This Person Does Not Exist, which itself went viral in 2019.

What's much harder is convincingly generating a moving person from a single still image, including information that simply isn't there. This is what D-ID has seemingly managed to crack. As Gil Perry told us: "The hard part is not just transforming the face and animating it. The rocket science here is how to make it look 100% real."

According to Perry, the biggest challenge D-ID had to overcome with its 'Live Portraits' was the lack of information you get from a single photo. Earlier attempts at facial re-animation have required lots of training data and also struggled with 'occlusion' (parts of the face being obstructed by hands or other objects). But this is something D-ID has made big strides in.

"The hard part is when you have no different angles – for example, you can upload a photo which is very frontal and without teeth," he said. "Our algorithms know how to predict and create the missing parts that you didn't have in the photo – for example, ears, teeth, the background. Basically, we cross what people call the uncanny valley."

The internet's mixed response to 'Deep Nostalgia' (which is based on D-ID's tech) perhaps shows it hasn't fully traversed that threshold yet, but it's certainly making good headway. It recently added new 'drivers', or animations, including the ability to make your subject blow a kiss or nod approvingly. And this is just the start of D-ID's re-animating ambitions.

You D-ID what?

D-ID's 'Live Portrait' tech is so adaptable (it's being used in everything from museum apps to social networks) because flexibility is baked into its process. So how exactly does it work?

"The way that the live portrait works is that we have a set of driver videos," says Perry. "We have about 100 of these movements. When a user uploads a photo, the company uses our API. Then our algorithms know how to transform landmarks, a set of points on the face of the still image, to act and move in a similar way to the landmarks or dots on the face of the driver video."
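To make that idea concrete, here is a minimal sketch of landmark-driven motion transfer. It is not D-ID's code: the function name `transfer_motion`, the three-point "faces" and the scale-matching step are all assumptions made for illustration. It only moves the still photo's landmarks the way the driver's landmarks moved, and leaves out the much harder job of rendering realistic pixels from them.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def _centroid(points: List[Point]) -> Point:
    """Average position of a set of landmarks."""
    n = len(points)
    return (sum(x for x, _ in points) / n, sum(y for _, y in points) / n)


def _scale(points: List[Point]) -> float:
    """Root-mean-square distance of the landmarks from their centroid."""
    cx, cy = _centroid(points)
    return (sum((x - cx) ** 2 + (y - cy) ** 2 for x, y in points) / len(points)) ** 0.5


def transfer_motion(
    source: List[Point],
    driver_neutral: List[Point],
    driver_frame: List[Point],
) -> List[Point]:
    """Hypothetical helper: move the still photo's landmarks the way the
    driver's landmarks moved between its neutral frame and the current frame."""
    if not (len(source) == len(driver_neutral) == len(driver_frame)):
        raise ValueError("all landmark sets must have the same number of points")
    driver_scale = _scale(driver_neutral)
    if driver_scale == 0:
        raise ValueError("driver landmarks must not all coincide")
    # Assumption: rescale the driver's motion so a large face in the photo
    # moves proportionally as much as a small face in the driver video.
    ratio = _scale(source) / driver_scale
    return [
        (sx + ratio * (fx - nx), sy + ratio * (fy - ny))
        for (sx, sy), (nx, ny), (fx, fy) in zip(source, driver_neutral, driver_frame)
    ]


# Toy example: the photo's "face" is twice the size of the driver's,
# and the driver moves its third landmark down by one unit.
photo = [(0.0, 0.0), (4.0, 0.0), (2.0, 4.0)]
neutral = [(0.0, 0.0), (2.0, 0.0), (1.0, 2.0)]
frame = [(0.0, 0.0), (2.0, 0.0), (1.0, 3.0)]
moved = transfer_motion(photo, neutral, frame)
```

In this toy case only the third landmark moves, and it moves by about two units rather than one because the photo's face is twice as large. A real system would repeat this for every frame of the driver video and then synthesise the image around the moved points.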

Crucially, the people and companies that license D-ID's tech aren't restricted to its own library of movements – they can also create their own. "Currently, we have enough drivers, but some of our customers work to create drivers by themselves," Perry explained. This also powers another D-ID product called 'Talking Heads', which turns text or audio into realistic videos of people talking.

D-ID's 'Talking Heads' feature has massive implications for movies and YouTube. In theory, YouTubers could simply script videos in their PJs and leave the presenting to their virtual avatars. But for photography, 'Live Portraits' is the big bombshell – particularly for stock photo companies.

"For them, this can really be a game-changer for two reasons," Perry said. "One, we can transform all their photos into videos. And two, when looking for a photo, most of the time the user doesn't find exactly what they need. We can change the expression – if you want the person to be a little bit happier or look in a different direction, we can change all that in a click of a button."

It's one thing impressing Twitter or TikTok with an animated photo, but quite another convincing stock photo veterans that a digitally-altered facial expression can meet their exacting standards. Is the tech really sufficiently cooked for professionals like that? "Yes, we are already doing that," Perry said. "We are selling to photographers and progressing fast with the largest stock footage companies. We also have this month another public company that is very famous for photo albums and photo scanning."

In this sense, re-animation tech from the likes of D-ID is challenging the definition of what a photo actually is. Rather than a frozen moment in time, photos are now a starting point for AI and machine learning to create infinitely tweakable alternate realities. Photography has been susceptible to manipulation since its birth, particularly in the post-Photoshop era. But since creating realistic videos from a single still image is a whole new ball game, isn't there serious potential for misuse?

Cruise control

Most of the big social networks, including Facebook and TikTok, have banned deepfakes, which differ from the likes of 'Deep Nostalgia' by being designed to deceive or spread false information. But even innocent implementations of the tech, like D-ID's 'Live Portraits', could theoretically turn malicious in the wrong hands.

Fortunately, this is something that D-ID has considered. In fact, the company actually started life in 2017 as an innovator in privacy tech that guarded against the rise of face recognition. When Perry created D-ID with his co-founders Sella Blondheim and Eliran Kuta, they made a facial de-identification system (hence the company name) whose aim was to be a privacy-enhancing firewall for photos and videos.

According to Perry, this is a fairly solid foundation from which to build safe AI face tech. "When we decided to enter this market, we understood that there is a potential for doing bad things with such technology," he said. "This would have happened with us entering or without. We decided that we are going to enter and make sure we take the market in the right direction. Our mission was to protect privacy against face recognition. We have the right background and knowledge."

But it's also about putting practical buffers in place to make sure social media isn't flooded with malevolent Tom Cruises (or worse). No-one can just grab D-ID's tech off-the-shelf to make videos like the viral Tom Cruise deepfakes, which still need advanced VFX skills even though they were made using the open-source tool DeepFaceLab.

"We are putting guard rails around the technology, so you cannot really do much harm with it," Perry said. "For example, you can see in 'Deep Nostalgia', it's only nostalgic and fun movements. We did a lot of tests to make sure that it just brings good emotions. We wrote an algorithm that we ran through Twitter and checked all the responses to see if they're positive or negative. We saw that 95% of them were positive."
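Perry doesn't say how that check worked, so the following is only a rough sketch of the general idea: label each response as positive or negative, then report the share that is positive. The word lists, the function names `classify` and `positive_share`, and the sample responses are all invented for illustration. A production system would more likely use a trained sentiment model than a keyword list.

```python
# Assumed, deliberately tiny word lists; not taken from D-ID.
POSITIVE = {"love", "amazing", "beautiful", "wonderful", "moving"}
NEGATIVE = {"creepy", "scary", "disturbing", "hate", "unsettling"}


def classify(text: str) -> str:
    """Label a response by counting positive and negative keywords."""
    words = [w.strip(".,!?'\"").lower() for w in text.split()]
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


def positive_share(responses: list) -> float:
    """Fraction of non-neutral responses that were labelled positive."""
    labels = [classify(r) for r in responses]
    rated = [label for label in labels if label != "neutral"]
    return rated.count("positive") / len(rated) if rated else 0.0


# Made-up sample responses, purely for demonstration.
sample = [
    "I love this, amazing!",
    "So creepy.",
    "Beautiful and moving",
    "ok",
]
share = positive_share(sample)
```

Here two of the three rated responses are positive and the neutral "ok" is left out of the calculation. Whether neutral replies count is a design choice, and one that would change any headline percentage.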

On top of that, D-ID says it's building a manifesto that will be published soon and is working on ways to help organizations detect if a photo has been manipulated. "We are also insisting, when it is possible, that our customers add a mark that will make it clear to the watcher that this is not [a] real photo or video," adds Perry.

Synth pop

This is all reassuring to those who may at this point be hyperventilating about the imminent demise of reality, or at least our ability to trust what we see online. And D-ID certainly sees its technology as pretty innocent. "We're basically transforming all the photos in the world to videos – we like to say we Harry Potter-ize the world," Perry says.

But there's also no doubt that technology like D-ID's has serious repercussions for our online media consumption. Photoshop may have democratized image manipulation after its 1990 launch, but the online world has long since moved on to video – after all, that's a big reason why 'Deep Nostalgia' was such a social media hit.

So how long will it be until the majority of the media we see on the internet is so-called 'synthetic media'? "I believe that in 5-10 years most of the media will be synthetic," Perry says. "I believe we are going to help make this happen closer to five years, and make sure that it's happening right."

There's a lot to iron out in the meantime, but in the short term expect to see those 'Deep Nostalgia' videos get even more animated. D-ID can already animate family photos with several faces, and says that animating people's bodies is "in the roadmap". With Photoshop's recent 'neural filters' also joining the party, life is about to get very interesting for our photos and videos – let's just hope it's more Harry Potter than Nightmare on AI Street.

from TechRadar - All the latest technology news https://ift.tt/37O3qz3
